Artificial intelligence is not yet ready to pass for human

In the run-up to Super Bowl Sunday, Amazon flooded social media with flirty ads teasing “Alexa’s new body.”

The game-day commercial depicts one woman’s fantasy of the AI voice assistant embodied by actor Michael B. Jordan, who seductively caters to her every whim, to the consternation of her increasingly angry husband, VentureBeat reported.

The idea of a new line of spouse-replacement robots may sound implausible, but the reality is that embodied, human-like AI may be closer than you think.

Today, AI avatars, that is, AI rendered with a digital body and/or face, lack Michael B.’s sex appeal. Most, in fact, are downright creepy.


Research shows that imbuing robots with human-like features endears them to us, up to a point. Beyond that threshold, the more human a system looks, the more repulsed we paradoxically feel.

That revulsion has a name: “the uncanny valley.” Masahiro Mori, the roboticist who coined the term, predicted a peak beyond the uncanny valley, where robots become indistinguishable from humans and appeal to us once again.

As you can imagine, such a robot could fool us into thinking it is human on a video call: a 21st-century remake of the old text-based Turing test.

On a recent Zoom call with legendary marketer Guy Kawasaki, I made a bold proclamation: in two years, Guy would no longer be able to distinguish between me and my company’s conversational AI, Kuki, on a video call. Guy’s eyebrows rose at the claim, and caveats began to tumble from my big, fat mouth.

Maybe on a quick video call. With low bandwidth. If he were drinking champagne and dialing in from a bubble bath, like the lady in the Alexa ad.

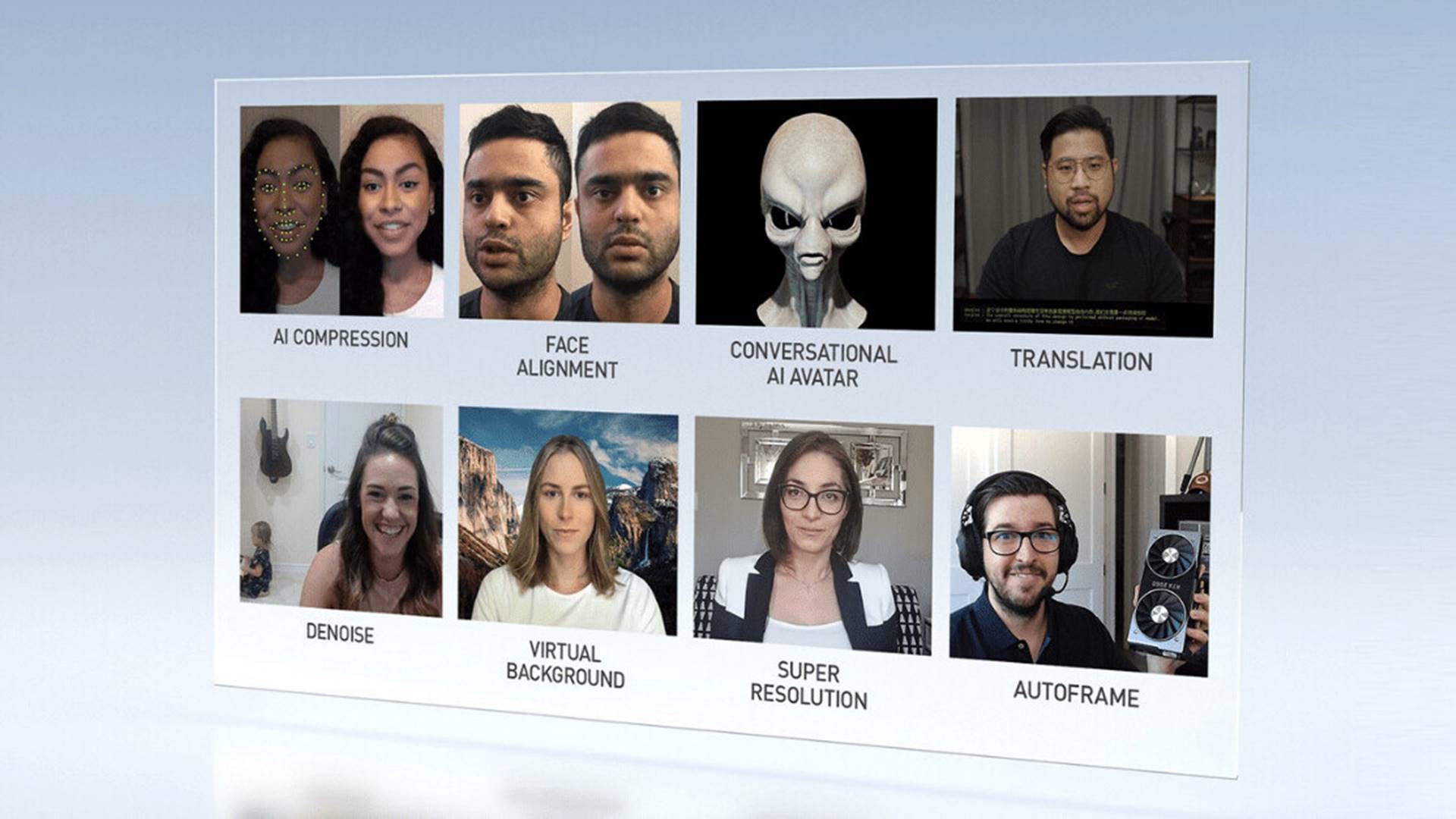

So let this be my public mea culpa and a more informed prediction. An AI that is good enough to pass for human on a video call needs five key technologies running in real time:

- A humanlike avatar
- A humanlike voice
- Humanlike emotions
- Humanlike movement
- Humanlike conversation

Avatars have come a long way lately, thanks to the widespread and inexpensive availability of motion capture technology (“MoCap”) and generative adversarial neural networks (“GANs”), the machine learning technique that underlies deepfakes. MoCap, which lets actors puppet characters through haptic suits and originally required the huge budget of films like Avatar, is now accessible to anyone with an iPhone X and free game engine software. Numerous online services make creating low-resolution deepfake images and videos trivial, democratizing a technology that, if left unchecked, could be a death knell for democracy.

Such advances have spawned new industries, from Japanese VTubers (a rising trend in the US, recently co-opted by PewDiePie) to fake “AI” influencers like Lil Miquela that purport to virtualize talent but secretly rely on human models behind the scenes.

With last week’s announcement of the MetaHuman Creator from Epic Games (maker of Fortnite and the Unreal Engine, in an industry that in 2020 surpassed movies and sports combined), anyone will soon be able to create and puppet an unlimited number of photorealistic fake faces for free.

Technology that enables human-like voices is also advancing rapidly. Amazon, Microsoft, and Google offer cloud text-to-speech (TTS) APIs that are powered by neural networks and generate increasingly human-like speech. Tools for creating custom voice fonts, modeled on a human actor from recorded sample sentences, are also readily available. Speech synthesis, like its now highly accurate counterpart, speech recognition, will only improve further with more computing power and training data.

But a convincing AI voice and a convincing face are worthless if the expressions do not match. Computer vision via the front-facing camera has shown promise for deciphering human facial expressions, and off-the-shelf APIs can analyze the sentiment of text.

Labs like NTT Data’s have demonstrated real-time mimicry of human gestures and expressions, and Magic Leap’s MICA teased compelling non-verbal avatar expressions.

However, mirroring a human is one thing; building an AI with its own seemingly autonomous mental and emotional state is another challenge.

To avoid what Dr Ari Shapiro calls the uncanny valley of behavior, the AI needs to display human-like movements that correspond to its “state of mind” and that are procedurally and dynamically triggered as the conversation develops.

Shapiro’s work at USC’s ICT lab has been groundbreaking in this field, along with startups like Speech Graphics, whose technology powers lip-syncing and facial expressions for game characters. Such systems take an avatar’s text utterance, analyze its sentiment, and use rules, sometimes coupled with machine learning, to assign an appropriate animation from a library. With more R&D and ML, procedural animation could be seamless within two years.
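To make the rule-based step concrete, here is a minimal Python sketch of the idea: score the words in an utterance, then look up an animation to match. It is an illustration under loud assumptions, not how Speech Graphics or the ICT lab actually do it. The word lists, the animation names, and the functions `classify_sentiment` and `pick_animation` are all hypothetical, and a production system would likely use a trained sentiment model rather than a handful of keywords.

```python
# Hypothetical word lists and animation names, invented for illustration only.
POSITIVE_WORDS = {"great", "love", "happy", "thanks", "wonderful"}
NEGATIVE_WORDS = {"sorry", "sad", "angry", "terrible", "hate"}

# A tiny stand-in for an animation library, keyed by sentiment label.
ANIMATION_LIBRARY = {
    "positive": "smile_and_nod",
    "negative": "furrowed_brow",
    "neutral": "idle_blink",
}


def classify_sentiment(utterance: str) -> str:
    """Label an utterance by counting positive and negative keywords."""
    # Lowercase each word and strip surrounding punctuation before matching.
    words = [w.strip(".,!?;:'\"").lower() for w in utterance.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def pick_animation(utterance: str) -> str:
    """Map the avatar's next line of dialogue to an animation name."""
    return ANIMATION_LIBRARY[classify_sentiment(utterance)]


if __name__ == "__main__":
    for line in ["I love this, thanks!", "I am so sorry to hear that."]:
        print(line, "->", pick_animation(line))
```

Even this toy version hints at why the problem is hard: a keyword count cannot tell sarcasm from sincerity, and a fixed library cannot blend gestures smoothly as the conversation shifts.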

Human-like conversation is the last and most difficult piece of the puzzle. While chatbots can deliver business value within narrow domains, most still struggle to hold a basic conversation. Deep learning plus more data plus more computing power has so far failed to yield significant breakthroughs in natural language understanding, compared with other areas of AI such as speech synthesis and computer vision.


The idea of human-like AI is deeply sexy (valued at $320 million and counting), but for at least the next few years, until the key components are “solved,” it will likely remain a fantasy. And as avatar improvements outpace other advances, our expectations will rise, but so will our disappointment when virtual assistants’ pretty faces lack the EQ and brains to match.

So it’s probably too early to speculate when a robot might fool a human over video calls, especially given the fact that machines haven’t really passed the traditional text-based Turing test yet.

Perhaps a more important question than “(when) can we create human-like AI?” is: should we? Do the opportunities, for interactive media characters, for AI companions in health care, for training or education, outweigh the dangers? And does human-like AI necessarily mean “able to pass as human,” or should we strive, as many industry insiders agree, for clearly non-human stylized beings that sidestep the uncanny valley?

As a lifelong sci-fi geek, I have always longed for a super AI sidekick who is human enough to banter with me, and I hope that with the right regulation, starting with basic laws requiring that all AIs identify themselves as such, this technology will be a net positive for humanity.

Or at least a coin-operated celebrity doppelganger like Michael B. to read you romance novels until your Audible free trial expires.