Why AI is so power-hungry

It takes a lot of energy for machines to learn from large data sets.

This month, Google forced out a prominent AI ethics researcher after she voiced frustration with the company for making her withdraw a research paper. The paper pointed out the risks of language-processing artificial intelligence, the type used in Google Search and other text-analysis products.

Among the risks is the large carbon footprint of developing this kind of AI technology. By some estimates, training an AI model generates as much carbon as it takes to build and drive five cars over their lifetimes.

I am a researcher who studies and develops AI models, and I am all too familiar with the skyrocketing energy and financial costs of AI research. Why have AI models become so power-hungry, and how are they different from traditional data center computation?


Today’s training is inefficient

Traditional data processing jobs done in data centers include video streaming, email, and social media. AI is more computationally intensive because it needs to read through lots of data until it learns to understand it, that is, until it is trained.

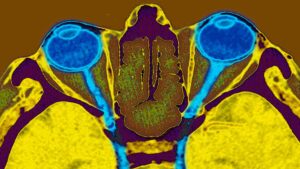

This training is very inefficient compared to how people learn. Modern AI uses artificial neural networks, which are mathematical computations that mimic neurons in the human brain. The strength of the connection between each neuron and its neighbor is a parameter of the network called a weight. To learn how to understand language, the network starts with random weights and adjusts them until its output agrees with the correct answer.
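To make "adjusting weights until the output agrees with the correct answer" concrete, here is a minimal sketch in Python. It assumes a single artificial neuron with two weights and a made-up target rule (the correct answer for inputs x1, x2 is 2·x1 − 3·x2); the function names, learning rate, and data are hypothetical. Real language models adjust billions of weights on broadly the same principle, nudging each weight in the direction that reduces the error, with far more machinery.

```python
import random


def make_data():
    """Hypothetical toy task: the 'correct answer' for inputs (x1, x2) is 2*x1 - 3*x2."""
    return [((x1, x2), 2 * x1 - 3 * x2) for x1 in range(-2, 3) for x2 in range(-2, 3)]


def train(data, epochs=200, lr=0.02, seed=0):
    """Start from random weights and repeatedly nudge them to shrink the error."""
    rng = random.Random(seed)
    w = [rng.uniform(-1, 1), rng.uniform(-1, 1)]  # random starting weights
    for _ in range(epochs):
        for (x1, x2), target in data:
            output = w[0] * x1 + w[1] * x2  # the neuron's current answer
            error = output - target         # how far off it is
            # Gradient step on the squared error (up to a constant factor):
            # move each weight against its gradient.
            w[0] -= lr * error * x1
            w[1] -= lr * error * x2
    return w


weights = train(make_data())
print([round(v, 3) for v in weights])  # close to [2.0, -3.0]
```

The starting weights are random, yet after many rounds of small corrections they settle on the values that reproduce the correct answers.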

A common way of training a language network is to feed it lots of text from websites like Wikipedia and news outlets with some of the words masked out, and ask it to guess the masked-out words. An example is “my dog is cute,” with the word “cute” masked out. Initially, the model gets them all wrong, but after many rounds of adjustment, the connection weights start to change and pick up patterns in the data. The network eventually becomes accurate.
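The masked-word exercise can be sketched in a few lines of Python. This is a toy under stated assumptions: words are split on whitespace, and a bigram counter (which word usually follows which) stands in for a neural network's learned weights. BERT itself uses a large neural network and looks at context on both sides of the mask; all function names here are hypothetical.

```python
from collections import Counter, defaultdict

MASK = "[MASK]"


def mask_word(sentence, index):
    """Hide the word at `index`; return the masked sentence and the hidden answer."""
    words = sentence.split()
    answer = words[index]
    words[index] = MASK
    return " ".join(words), answer


def build_bigram_counts(corpus):
    """Count which word follows which (a crude stand-in for learned weights)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def guess_masked(masked_sentence, counts):
    """Guess the hidden word from the word just before the mask."""
    words = masked_sentence.split()
    i = words.index(MASK)
    if i == 0 or not counts.get(words[i - 1]):
        return None  # no usable left-hand context was seen in the corpus
    return counts[words[i - 1]].most_common(1)[0][0]


corpus = ["my dog is cute", "the cat is cute", "my dog is happy"]
counts = build_bigram_counts(corpus)
masked, answer = mask_word("my dog is cute", 3)
print(masked, "->", guess_masked(masked, counts), "| answer:", answer)
# prints: my dog is [MASK] -> cute | answer: cute
```

Training on the masked task means comparing each guess with the hidden answer and adjusting; here the "adjustment" is just counting, which is why it only works on text it has effectively already seen.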

One recent model, called Bidirectional Encoder Representations from Transformers (BERT), used 3.3 billion words from English books and Wikipedia articles. Moreover, during training, BERT read this data set not once, but 40 times. To compare, an average child learning to talk might hear 45 million words by age five, about 3,000 times fewer than BERT.
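The 3,000 figure only works out once the 40 passes are counted. Using only the numbers quoted above, 3.3 billion words read 40 times is 132 billion words, roughly 2,900 times the 45 million a child hears, which rounds to the 3,000 in the text:

```python
# Figures quoted in the text
bert_corpus_words = 3.3e9  # words from English books and Wikipedia
passes = 40                # times BERT read the data set during training
child_words = 45e6         # words a child might hear by age five

bert_words_read = bert_corpus_words * passes
ratio = bert_words_read / child_words
print(f"BERT read {bert_words_read:.3g} words in total")        # 1.32e+11
print(f"about {ratio:,.0f} times what a five-year-old hears")  # 2,933
```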

Looking for the right structure

What makes language models even more costly to build is that this training process happens many times during the course of development. This is because researchers want to find the best structure for the network: how many neurons, how many connections between neurons, how fast the parameters should change during learning, and so on. The more combinations they try, the better the chance that the network achieves high accuracy. Human brains, in contrast, do not need to find an optimal structure; they come with a prebuilt structure that has been honed by evolution.
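One simple way to search for a structure is a grid search, which tries every combination of options. The Python sketch below assumes three hypothetical settings; `fake_train_and_evaluate` is a placeholder standing in for an entire training run, and the option values are illustrative, not taken from BERT or any real project. Researchers also use random search and other strategies; grid search is just the easiest to show.

```python
import itertools

# Hypothetical search space: depth, width, and learning speed.
# The values are illustrative only.
search_space = {
    "num_layers": [6, 12, 24],
    "hidden_size": [256, 512, 768, 1024],
    "learning_rate": [1e-4, 3e-4, 1e-3],
}


def grid(space):
    """Yield every combination of options as a dict."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def search(space, train_and_evaluate):
    """Run one full training per configuration and keep the most accurate."""
    best_config, best_acc, runs = None, float("-inf"), 0
    for config in grid(space):
        acc = train_and_evaluate(config)  # placeholder for an entire training run
        runs += 1
        if acc > best_acc:
            best_config, best_acc = config, acc
    return best_config, best_acc, runs


# Stand-in scorer so the sketch runs; a real one would train and test a model.
def fake_train_and_evaluate(config):
    return (config["num_layers"] / 24
            - abs(config["hidden_size"] - 768) / 1000
            - abs(config["learning_rate"] - 3e-4))


best, acc, runs = search(search_space, fake_train_and_evaluate)
print(f"{runs} training runs to find {best}")
```

With 3 × 4 × 3 options this already means 36 full training runs; adding one more setting with five options would make it 180. That multiplication is how searching over structures ends up costing far more than training a single model.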

As companies and academics compete in the AI space, the pressure is on to improve on the state of the art. Even achieving a 1 percent improvement in accuracy on difficult tasks like machine translation is considered significant and leads to good publicity and better products. But to get that 1 percent improvement, one researcher might train the model thousands of times, each time with a different structure, until the best one is found.

Researchers at the University of Massachusetts Amherst estimated the energy cost of developing AI language models by measuring the power consumption of common hardware used during training. They found that training BERT once has the carbon footprint of a passenger flying a round trip between New York and San Francisco. However, by searching using different structures (that is, by training the algorithm multiple times on the data with slightly different numbers of neurons, connections, and other parameters), the cost became the equivalent of 315 passengers, or an entire 747 jet.

Bigger and hotter

AI models are also much bigger than they need to be, and they grow larger every year. A more recent language model similar to BERT, called GPT-2, has 1.5 billion weights in its network. GPT-3, which created a stir this year because of its high accuracy, has 175 billion weights.
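The growth is easy to quantify. The short Python check below assumes each weight is stored as a 16-bit floating-point number (an assumption; 32-bit storage would double the figures). On that basis GPT-3 has roughly 117 times as many weights as GPT-2, and merely storing its weights takes about 350 GB, before counting any of the extra memory used during training.

```python
GPT2_WEIGHTS = 1.5e9   # from the text
GPT3_WEIGHTS = 175e9   # from the text
BYTES_PER_WEIGHT = 2   # assumption: 16-bit floats


def storage_gb(num_weights, bytes_per_weight=BYTES_PER_WEIGHT):
    """Gigabytes needed just to hold the weights (1 GB = 1e9 bytes)."""
    return num_weights * bytes_per_weight / 1e9


growth = GPT3_WEIGHTS / GPT2_WEIGHTS
print(f"GPT-3 has {growth:.0f}x the weights of GPT-2")
print(f"GPT-2: {storage_gb(GPT2_WEIGHTS):.0f} GB, GPT-3: {storage_gb(GPT3_WEIGHTS):.0f} GB")
```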

Researchers discovered that having larger networks leads to better accuracy, even if only a tiny fraction of the network ends up being useful. Something similar happens in children’s brains when neuronal connections are first added and then reduced, but the biological brain is much more energy-efficient than computers.
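The observation that only a small fraction of a large network ends up mattering is what pruning techniques exploit. The sketch below shows magnitude pruning, one simple and widely used heuristic that the article itself does not describe: keep the weights with the largest absolute values and zero out the rest. The function name and example weights are hypothetical, and whether accuracy survives pruning depends on the model, which this toy does not show.

```python
def magnitude_prune(weights, keep_fraction):
    """Keep the largest-magnitude weights and set the rest to zero.

    Magnitude pruning is one common heuristic, used here as an illustration.
    """
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = max(1, round(len(weights) * keep_fraction))  # how many weights survive
    by_size = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(by_size[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


layer = [0.9, -0.05, 0.01, -1.2, 0.3]  # hypothetical weights
pruned = magnitude_prune(layer, keep_fraction=0.4)
print(pruned)  # [0.9, 0.0, 0.0, -1.2, 0.0]
print(f"{sum(w != 0 for w in pruned)} of {len(layer)} weights kept")
```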

AI models are trained on specialized hardware like graphics processing units (GPUs), which draw more power than traditional CPUs. If you own a gaming laptop, it probably has one of these graphics processors to create advanced graphics for, say, playing Minecraft RTX. You might also notice that it generates a lot more heat than a regular laptop.

All of this means that developing advanced AI models is adding up to a large carbon footprint. Unless we switch to 100 percent renewable energy sources, AI progress may stand at odds with the goals of cutting greenhouse emissions and slowing down climate change. The financial cost of development is also becoming so high that only a few select labs can afford to do it, and they will be the ones to set the agenda for what kinds of AI models get developed.
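The link between hardware power draw and carbon footprint can be written as a back-of-the-envelope formula. Energy is the number of GPUs times their power times training hours, scaled up by the data center's power usage effectiveness (PUE, the ratio of total facility energy to computing energy); emissions are that energy times the carbon intensity of the electricity. The Python sketch below uses purely hypothetical inputs, not measurements of any real model, and it treats renewable electricity as zero-carbon, which is a simplification.

```python
def training_emissions_kg(num_gpus, watts_per_gpu, hours, pue, kg_co2_per_kwh):
    """Rough CO2 estimate for one training run.

    energy (kWh) = GPUs x watts x hours / 1000, scaled up by data center overhead (PUE)
    emissions    = energy x carbon intensity of the electricity supply
    """
    energy_kwh = num_gpus * watts_per_gpu * hours / 1000 * pue
    return energy_kwh * kg_co2_per_kwh


# Hypothetical run: 8 GPUs at 300 W for 100 hours, PUE of 1.5.
# 0.4 kg CO2/kWh is an illustrative fossil-heavy grid; 0.0 models renewables
# as carbon-free (a simplification).
fossil_heavy = training_emissions_kg(8, 300, 100, pue=1.5, kg_co2_per_kwh=0.4)
renewable = training_emissions_kg(8, 300, 100, pue=1.5, kg_co2_per_kwh=0.0)
print(f"{fossil_heavy:.0f} kg CO2 vs {renewable:.0f} kg CO2")
```

Every structure search multiplies the first three factors, while the energy source sets the last one, which is why the grid mix matters as much as the hardware.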

Doing more with less

What does this mean for the future of AI research? Things may not be as bleak as they look. The cost of training might come down as more efficient training methods are invented. Similarly, while data center energy use was predicted to explode in recent years, this has not happened, thanks to improvements in data center efficiency, more efficient hardware, and better cooling.

There is also a trade-off between the cost of training the models and the cost of using them, so spending more energy at training time to come up with a smaller model might actually make using it cheaper. Because a model will be used many times in its lifetime, that can add up to large energy savings.
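The training-versus-use trade-off amounts to simple accounting: lifetime energy is training energy plus energy per use times the number of uses. The minimal Python sketch below uses hypothetical numbers for a large model and a smaller model that costs more to produce but less to run; the smaller model comes out ahead once it has been used more than the break-even number of times.

```python
def lifetime_energy_kwh(train_kwh, kwh_per_query, num_queries):
    """Total energy over a model's life: one-off training plus every use."""
    return train_kwh + kwh_per_query * num_queries


def break_even_queries(extra_train_kwh, kwh_saved_per_query):
    """Uses after which the extra training energy has been paid back."""
    return extra_train_kwh / kwh_saved_per_query


# Hypothetical figures, not measurements
big = dict(train_kwh=1000, kwh_per_query=0.002)
small = dict(train_kwh=1500, kwh_per_query=0.0005)

n = break_even_queries(small["train_kwh"] - big["train_kwh"],
                       big["kwh_per_query"] - small["kwh_per_query"])
print(f"break-even after about {n:,.0f} queries")
for queries in (100_000, 1_000_000):
    print(f"{queries:>9,} queries: big {lifetime_energy_kwh(num_queries=queries, **big):,.0f} kWh, "
          f"small {lifetime_energy_kwh(num_queries=queries, **small):,.0f} kWh")
```

Below the break-even point the big model is cheaper overall; above it the savings per use keep accumulating in the smaller model's favor.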

In my lab’s research, we have been looking at ways to make AI models smaller by sharing weights, or using the same weights in multiple parts of the network. We call these shapeshifter networks because a small set of weights can be reconfigured into a larger network of any shape or structure. Other researchers have shown that weight sharing gives better performance in the same amount of training time.
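To illustrate the general idea of weight sharing (not the shapeshifter networks method itself, whose details are not given here), the Python sketch below stores a small pool of parameters and fills larger layers by cycling through that pool. The class name, the cyclic indexing scheme, and the numbers are all hypothetical; real weight-sharing methods choose which weights to reuse far more carefully.

```python
class SharedWeightBank:
    """A small pool of parameters reused to fill larger layers (illustrative only)."""

    def __init__(self, params):
        self.params = list(params)

    def layer(self, rows, cols, offset=0):
        """Build a rows x cols weight matrix by cycling through the shared pool.

        Hypothetical scheme: entry (r, c) reads pool index (offset + r*cols + c) mod pool size.
        """
        n = len(self.params)
        return [[self.params[(offset + r * cols + c) % n] for c in range(cols)]
                for r in range(rows)]


def matvec(matrix, vector):
    """Multiply a weight matrix by an input vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


bank = SharedWeightBank([0.5, -1.0, 0.25, 2.0])  # only 4 stored numbers
layer1 = bank.layer(3, 4)                         # 12 weights, all drawn from the pool
layer2 = bank.layer(2, 3, offset=1)               # 6 more weights from the same pool

stored = len(bank.params)
used = sum(len(row) for m in (layer1, layer2) for row in m)
hidden = matvec(layer1, [1.0, 1.0, 1.0, 1.0])
output = matvec(layer2, hidden)
print(f"{stored} stored weights serve {used} connections; output = {output}")
```

The sketch shows only the forward pass. During gradient-based training, each pooled number would be updated using the sum of the gradients from every position that reads it, so the network has far fewer numbers to store and adjust than connections.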

Looking forward, the AI community should invest more in developing energy-efficient training schemes. Otherwise, it risks having AI become dominated by a select few who can afford to set the agenda, including what types of models are developed, what types of data are used to train them, and what the models are used for.

Arstechnica.com / TechConflict.Com