How synthetic data could save AI

AI is facing several critical challenges. Not only does it need huge amounts of data to deliver accurate results, but it also needs to be able to ensure that data isn’t biased, and it needs to comply with increasingly restrictive data privacy regulations, according to VentureBeat.

We’ve seen several solutions proposed over the last few years to address these challenges, including various tools designed to identify and reduce bias, tools that anonymize user data, and programs to ensure that data is only collected with user consent. But each of these solutions is facing challenges of its own.

Now we’re seeing a new industry emerge that promises to be a saving grace: synthetic data. Synthetic data is artificial, computer-generated data that can stand in for data obtained from the real world.

A synthetic dataset must have the same mathematical and statistical properties as the real-world dataset it is replacing, but it does not explicitly represent real individuals. Think of it as a digital mirror of real-world data that is statistically reflective of that world.
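To make the “same statistical properties” idea concrete, here is a minimal Python sketch under deliberately simple assumptions: the real data is purely numeric with two columns, and the model is a single bivariate normal. It estimates the means and covariance of the real rows and then samples brand-new rows with those parameters. The function name `fit_and_sample` is hypothetical; production synthetic data tools model far richer structure (skewed distributions, categorical fields, many correlated columns).

```python
import math
import random
import statistics


def fit_and_sample(real_rows, n_samples, seed=0):
    """Fit a bivariate normal to (x, y) rows and draw synthetic rows from it.

    Illustrative only: a single multivariate normal is the simplest possible model.
    """
    xs = [r[0] for r in real_rows]
    ys = [r[1] for r in real_rows]
    n = len(real_rows)
    mx, my = statistics.fmean(xs), statistics.fmean(ys)

    # Sample variances and covariance (n - 1 denominator)
    vx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    vy = sum((y - my) ** 2 for y in ys) / (n - 1)
    cxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)

    # Cholesky factor L of the 2x2 covariance matrix: L times L-transpose
    # equals [[vx, cxy], [cxy, vy]]
    l11 = math.sqrt(vx)
    l21 = cxy / l11
    l22 = math.sqrt(max(vy - l21 ** 2, 0.0))  # clamp tiny negative rounding error

    # Turn independent standard normals into correlated synthetic rows
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_samples):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        synthetic.append((mx + l11 * z1, my + l21 * z1 + l22 * z2))
    return synthetic
```

Given, say, a table of hypothetical (age, income) pairs, the synthetic rows reproduce the input’s means, variances, and correlation, yet no row is copied from the input. The trade-off of such a simple model is that it misses outliers and nonlinear relationships that real data often contains.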

This enables training AI systems in a completely virtual realm. And it can be readily customized for a variety of use cases ranging from healthcare to retail, finance, transportation, and agriculture.

There’s significant movement happening on this front. More than 50 vendors have already developed synthetic data solutions, according to research published last June by StartUs Insights.

I’ll outline some of the leading players in a moment. First, though, let’s take a closer look at the problems they’re promising to solve.

The trouble with real data

Over the past few years, there has been increasing concern about how inherent biases in datasets can unwittingly lead to AI algorithms that perpetuate systemic discrimination.

In fact, Gartner predicts that through 2022, 85% of AI projects will deliver erroneous outcomes due to bias in data, algorithms, or the teams responsible for managing them.

The proliferation of AI algorithms has also led to growing concerns over data privacy.

In turn, this has led to stronger consumer data privacy and protection laws in the EU with GDPR, as well as in U.S. jurisdictions including California and, most recently, Virginia.

These laws give consumers more control over their personal data.

For example, the Virginia law grants consumers the right to access, correct, delete, and obtain a copy of personal data, as well as to opt out of the sale of personal data and to deny algorithmic access to personal data for the purposes of targeted advertising or profiling of the consumer.

By restricting access to this information, a certain amount of individual protection is gained, but at the cost of the algorithm’s effectiveness.

The more data an AI algorithm can train on, the more accurate and effective the results can be. Without access to ample data, the upsides of AI, such as assisting with medical diagnoses and drug research, could also be limited.

One alternative often used to offset privacy concerns is anonymization.

Personal data, for example, can be anonymized by masking or eliminating identifying characteristics, such as removing names and credit card numbers from ecommerce transactions or removing identifying content from healthcare records. But there is growing evidence that even if data has been anonymized from one source, it can be correlated with consumer datasets exposed in security breaches.

In fact, by combining data from multiple sources, it is possible to form a surprisingly clear picture of our identities even if there has been a degree of anonymization. In some instances, this can even be done by correlating data from public sources, without a nefarious security hack.
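This kind of re-identification is often called a linkage attack. The Python sketch below assumes a “de-identified” table whose names have been removed but which still holds quasi-identifiers (ZIP code, birth year, sex), plus a public table, such as a voter roll, that pairs the same fields with names. The function `link_records`, the field names, and all records are hypothetical.

```python
def link_records(deidentified, public, keys=("zip", "birth_year", "sex")):
    """Re-identify rows of a name-stripped table by joining on quasi-identifiers.

    Returns {row_index: name} for every de-identified row whose quasi-identifier
    combination matches exactly one person in the public table.
    """
    # Index the public table by quasi-identifier combination
    index = {}
    for person in public:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = {}
    for i, row in enumerate(deidentified):
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match re-identifies the row
            matches[i] = candidates[0]
    return matches


# Hypothetical data: health records with names removed, and a public roll
health = [
    {"zip": "22030", "birth_year": 1985, "sex": "F", "diagnosis": "asthma"},
    {"zip": "22030", "birth_year": 1990, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "10001", "birth_year": 1985, "sex": "F", "diagnosis": "flu"},
]
voters = [
    {"name": "Alice", "zip": "22030", "birth_year": 1985, "sex": "F"},
    {"name": "Bob", "zip": "22030", "birth_year": 1990, "sex": "M"},
    {"name": "Carol", "zip": "10001", "birth_year": 1985, "sex": "F"},
    {"name": "Dana", "zip": "10001", "birth_year": 1985, "sex": "F"},
]
print(link_records(health, voters))  # {0: 'Alice', 1: 'Bob'}
```

Stripping the name column did not protect the first two patients, because their combination of ZIP code, birth year, and sex is unique in the public table; only the third record, which matches two people, stays ambiguous. Synthetic records are meant to sidestep this attack because they are not supposed to correspond to real individuals at all.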

Synthetic data’s solution

Synthetic data promises to deliver the advantages of AI without the downsides. Not only does it take our real personal data out of the equation, but a general goal for synthetic data is to perform better than real-world data by correcting bias that is often ingrained in the real world.
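One common way synthetic data is used against one form of bias is to generate extra examples for an underrepresented group, so a model does not learn to neglect it. The Python sketch below follows the idea of SMOTE (Synthetic Minority Over-sampling Technique): each new row is a random point on the segment between a minority-group row and one of its nearest minority-group neighbors. It assumes numeric features and Euclidean distance; the name `smote_like` is hypothetical, and this is not presented as the method any vendor named in this article uses.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Return n_new synthetic rows for an underrepresented group.

    Each new row is a random point on the segment between a randomly chosen
    minority row and one of its k nearest minority neighbors (SMOTE-style).
    Requires at least two minority rows.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest neighbors of `base` among the other minority rows
        neighbors = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbors)
        t = rng.random()  # 0 <= t < 1: how far to move from base toward other
        synthetic.append(tuple(a + t * (o - a) for a, o in zip(base, other)))
    return synthetic
```

Appending the generated rows to the original minority rows lets both groups carry equal weight during training. Because interpolation only fills in the space between existing minority examples, it cannot invent variation the real data never contained, and rebalancing alone does not address every kind of bias.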

Although ideal for applications that use personal data, synthetic data has other use cases, too.

One example is complex computer vision modeling where many factors interact in real time. Synthetic video datasets leveraging advanced gaming engines can be created with hyper-realistic rendering to portray all the possible eventualities in an autonomous driving scenario, whereas trying to shoot photos or videos of the real world to capture all these events would be impractical, perhaps impossible, and likely dangerous.

These synthetic datasets can dramatically speed up and improve the training of autonomous driving systems.

Perhaps ironically, one of the primary tools for building synthetic data is the same one used to create deepfake videos. Both make use of generative adversarial networks (GANs), a pair of neural networks.

One network, the generator, produces the synthetic data, and the second, the discriminator, tries to detect whether it’s real. This runs in a loop, with the generator improving the quality of the data until the discriminator cannot tell the difference between real and synthetic.
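The adversarial loop can be shown at toy scale. This Python sketch is an illustration under heavy simplifying assumptions, not a practical GAN: the real data is one-dimensional, drawn from a normal distribution with mean 2; the generator is just G(z) = z + b, a single learnable shift of standard normal noise; and the discriminator is logistic regression, D(x) = sigmoid(w * x + c). Real GANs use deep neural networks for both players and rely on automatic differentiation, whereas the gradients here are written out by hand. The function names and hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=2.0, steps=2000, batch=128, lr=0.1, seed=0):
    """Alternating GAN training on 1-D data.

    Real data:      x ~ N(real_mean, 1)
    Generator:      G(z) = z + b, with z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated probability x is real
    """
    rng = random.Random(seed)
    b = 0.0          # generator starts away from the real mean
    w, c = 0.0, 0.0  # discriminator starts undecided (D = 0.5 everywhere)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient descent on
        # -mean(log D(real)) - mean(log(1 - D(fake)))
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x
            gc += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: gradient descent on -mean(log D(fake)), which moves
        # the fake samples toward where the updated discriminator says "real"
        gb = sum((sigmoid(w * x + c) - 1.0) * w for x in fake) / batch
        b -= lr * gb

    return b, w, c


b, w, c = train_toy_gan()
print(f"generator shift b = {b:.2f} (real mean is 2.0)")
print(f"D(2.0) = {sigmoid(w * 2.0 + c):.2f}")
```

Early on, the discriminator learns that larger values look real, which pushes b upward. As the fake distribution lines up with the real one, the discriminator’s best strategy becomes outputting roughly 0.5 for every input, which is the point where it “cannot tell the difference.” A linear discriminator like this one can only detect a difference in means, which is one reason practical GANs need far more expressive networks.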

The emerging ecosystem

Forrester Research recently identified several critical technologies, including synthetic data, that will comprise what it views as “AI 2.0,” advances that radically expand AI possibilities.

By more completely anonymizing data and correcting for inherent biases, as well as creating data that would otherwise be difficult to obtain, synthetic data could become the saving grace for many big data applications.

Synthetic data also comes with another big benefit: you can create datasets quickly, often with the data already labeled for supervised learning. And it does not need to be cleaned and maintained the way real data does. So, on paper at least, it comes with some big time and cost savings.
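Labels come for free because a generator decides what it will draw before drawing it. As a toy Python illustration, assume a hypothetical task of telling horizontal lines from vertical ones in tiny 8x8 binary images: each image is produced from a randomly chosen label, so no human annotation step is needed. The function `make_line_images` is hypothetical; rendered driving scenes apply the same principle at vastly greater realism.

```python
import random


def make_line_images(n, size=8, seed=0):
    """Generate n (image, label) pairs.

    Each image is a size x size grid of 0/1 pixels containing one full
    horizontal or vertical line; the label records which kind was drawn.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice(["horizontal", "vertical"])  # decide the label first
        pos = rng.randrange(size)                        # which row or column
        img = [[0] * size for _ in range(size)]
        for i in range(size):
            if label == "horizontal":
                img[pos][i] = 1
            else:
                img[i][pos] = 1
        data.append((img, label))
    return data


dataset = make_line_images(1000)
print(len(dataset), dataset[0][1])
```

Every pair is correctly labeled by construction, and generating a million examples costs only compute time. The catch is that a model trained only on such clean, idealized data may not transfer to messier real inputs.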

Several well-established companies are among those generating synthetic data.

IBM describes this as data fabrication, creating synthetic test data to eliminate the risk of sensitive data leakage and address GDPR and regulatory issues.

AWS has developed in-house synthetic data tools to generate datasets for training Alexa on new languages.

And Microsoft has developed a tool in collaboration with Harvard with a synthetic data capability that allows for increased collaboration between research parties.

Despite these examples, it is still early days for synthetic data, and the developing market is being led by startups.

To wrap up, let’s take a look at some of the early leaders in this emerging industry. The list is based on my own research and on industry research organizations including G2 and StartUs Insights.

- AiFi — Uses synthetically generated data to simulate retail stores and shopper behavior.

- AI.Reverie — Generates synthetic data to train computer vision algorithms for activity recognition, object detection, and segmentation. Work has included wide-scope scenes like smart cities, rare plane identification, and agriculture, along with smart-store retail.

- Anyverse — Simulates scenarios to create synthetic datasets using raw sensor data, image processing functions, and custom LiDAR settings for the automotive industry.

- Cvedia — Creates synthetic images that simplify the sourcing of large volumes of labeled, real, and visual data. The simulation platform employs multiple sensors to synthesize photo-realistic environments resulting in empirical dataset creation.

- DataGen — Focuses on interior-environment use cases, like smart stores, in-home robotics, and augmented reality.

- Diveplane — Creates synthetic ‘twin’ datasets for the healthcare industry with the same statistical properties as the original data.

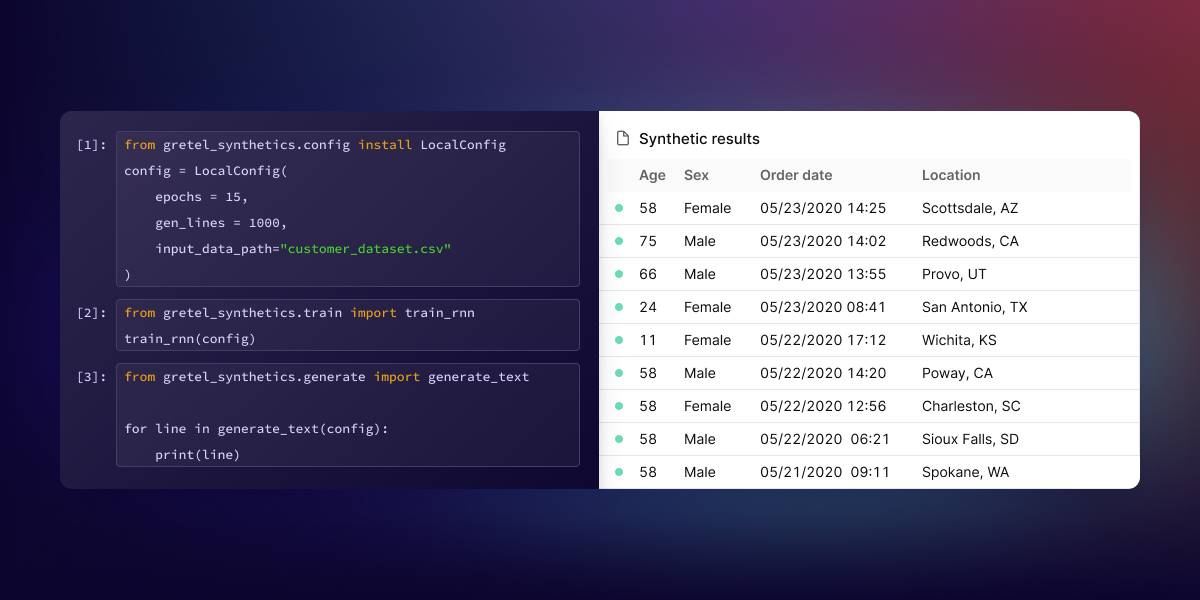

- Gretel — Aiming to be the GitHub equivalent for data, the company produces synthetic datasets for developers that retain the same insights as the original data source.

- Hazy — Generates datasets to boost fraud and money laundering detection to combat financial crime.

- Mostly AI — Focuses on insurance and finance sectors and was one of the first companies to create synthetic structured data.

- OneView – Develops virtual synthetic datasets for analysis of earth observation imagery by machine learning algorithms.