Machine learning needs a culture change, AI research survey finds

The machine learning community, particularly in the fields of computer vision and language processing, has a data culture problem. That’s according to a survey of research into the community’s dataset collection and use practices published earlier this month.

What’s needed is a shift away from reliance on the large, poorly curated datasets used to train machine learning models. Instead, the study recommends a culture that cares for the people who are represented in datasets and respects their privacy and property rights. But in today’s machine learning environment, the survey authors said, “anything goes.”

“Data and its (dis)contents: A survey of dataset development and use in machine learning” was written by University of Washington linguists Amandalynne Paullada and Emily Bender, Mozilla Foundation fellow Inioluwa Deborah Raji, and Google research scientists Emily Denton and Alex Hanna. The paper concluded that large language models have the capacity to perpetuate prejudice and bias against a range of marginalized communities and that poorly annotated datasets are part of the problem.

The work also calls for more rigorous data management and documentation practices. Datasets made this way will undoubtedly require more time, money, and effort but will “encourage work on approaches to machine learning that go beyond the current paradigm of techniques idolizing scale.”

“We argue that fixes that focus narrowly on improving datasets by making them more representative or more challenging might miss the more general point raised by these critiques, and we’ll be trapped in a game of dataset whack-a-mole rather than making progress, so long as notions of ‘progress’ are largely defined by performance on datasets,” the paper reads. “Should this come to pass, we predict that machine learning as a field will be better positioned to understand how its technology impacts people and to design solutions that work with fidelity and equity in their deployment contexts.”

Events over the past year have brought to light the machine learning community’s shortcomings, which have often harmed people from marginalized communities. After Google fired Timnit Gebru, an incident Googlers refer to as a case of “unprecedented research censorship,” Reuters reported on Wednesday that the company has started carrying out reviews of research papers on “sensitive topics” and that on at least three occasions, authors have been asked not to put Google technology in a negative light, according to internal communications and people familiar with the matter. And yet a Washington Post profile of Gebru this week revealed that Google AI chief Jeff Dean had asked her to investigate the negative impact of large language models this fall.

In conversations about GPT-3, coauthor Emily Bender previously told VentureBeat she wants to see the NLP community prioritize good science. Bender was co-lead author of a paper with Gebru that was brought to light earlier this month after Google fired Gebru. That paper examined how the use of large language models can impact marginalized communities. Last week, organizers of the Fairness, Accountability, and Transparency (FAccT) conference accepted the paper for publication.

Also last week, Hanna joined colleagues on the Ethical AI team at Google and sent a note to Google leadership demanding that Gebru be reinstated. On the same day, members of Congress familiar with algorithmic bias sent a letter to Google CEO Sundar Pichai demanding answers.

The company’s decision to censor AI researchers and fire Gebru may also carry policy implications. Right now, Google, MIT, and Stanford are some of the most active or influential producers of AI research published at major annual academic conferences. Members of Congress have proposed regulation to guard against algorithmic bias, while experts have called for increased taxes on Big Tech, in part to fund independent research. VentureBeat recently spoke with six experts in AI, ethics, and law about the ways Google’s AI ethics meltdown could affect policy.

Earlier this month, “Data and its (Dis)contents” received an award from organizers of the ML Retrospectives, Surveys, and Meta-analyses workshop at NeurIPS, an AI research conference that attracted 22,000 attendees. Nearly 2,000 papers were published at NeurIPS this year, including work related to failure detection for safety-critical systems; methods for faster, more efficient backpropagation; and the beginnings of a project that treats climate change as a machine learning grand challenge.

Another Hanna paper, presented at the Resistance AI workshop, urges the machine learning community to go beyond scale when considering how to address systemic social problems and asserts that resistance to scale thinking is needed. Hanna spoke with VentureBeat earlier this year about the use of critical race theory when considering matters related to race, identity, and fairness.

In natural language processing in recent years, networks made using the Transformer neural network architecture and increasingly large corpora of data have racked up high performance marks in benchmarks like GLUE. Google’s BERT and derivatives of BERT led the way, followed by networks like Microsoft’s MT-DNN, Nvidia’s Megatron, and OpenAI’s GPT-3. Introduced in May, GPT-3 is the largest language model to date. A paper about the model’s performance won one of three best paper awards given to researchers at NeurIPS this year.

The scale of large datasets makes it hard to thoroughly scrutinize their contents. This leads to repeated examples of algorithmic bias that return obscenely biased results about Muslims, people who are queer or do not conform to an expected gender identity, people who are disabled, women, and Black people, among other demographics.

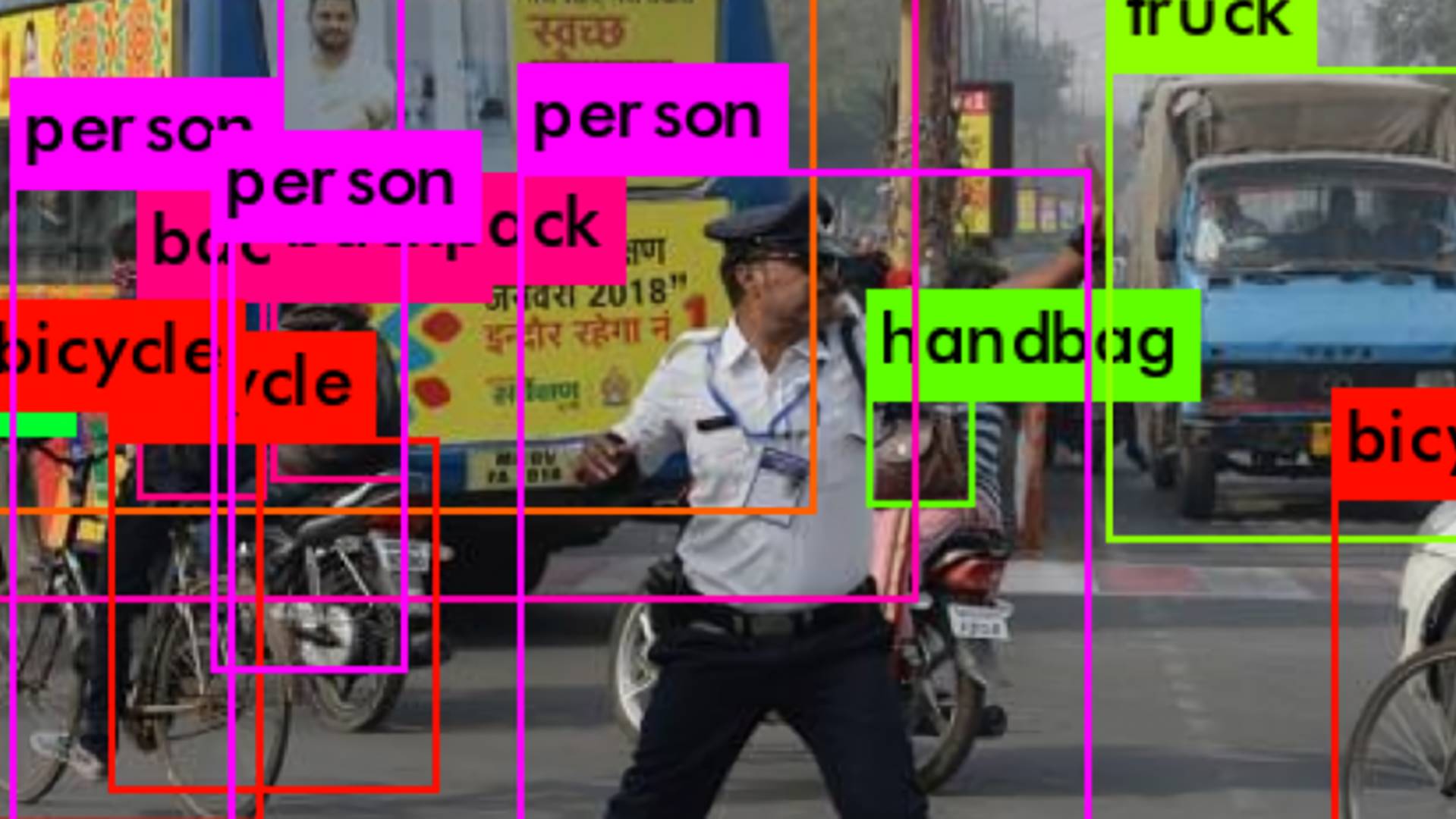

The perils of large datasets are also demonstrated in the computer vision field, evidenced by Stanford University researchers’ announcement in December 2019 that they would remove offensive labels and images from ImageNet. The model StyleGAN, developed by Nvidia, also produced biased results after training on a large image dataset. And following the discovery of sexist and racist images and labels, creators of 80 Million Tiny Images apologized and asked engineers to delete and no longer use the material.

Venturebeat.com / Tech.Conflict.Com