The Christchurch shooter and YouTube’s radicalization trap

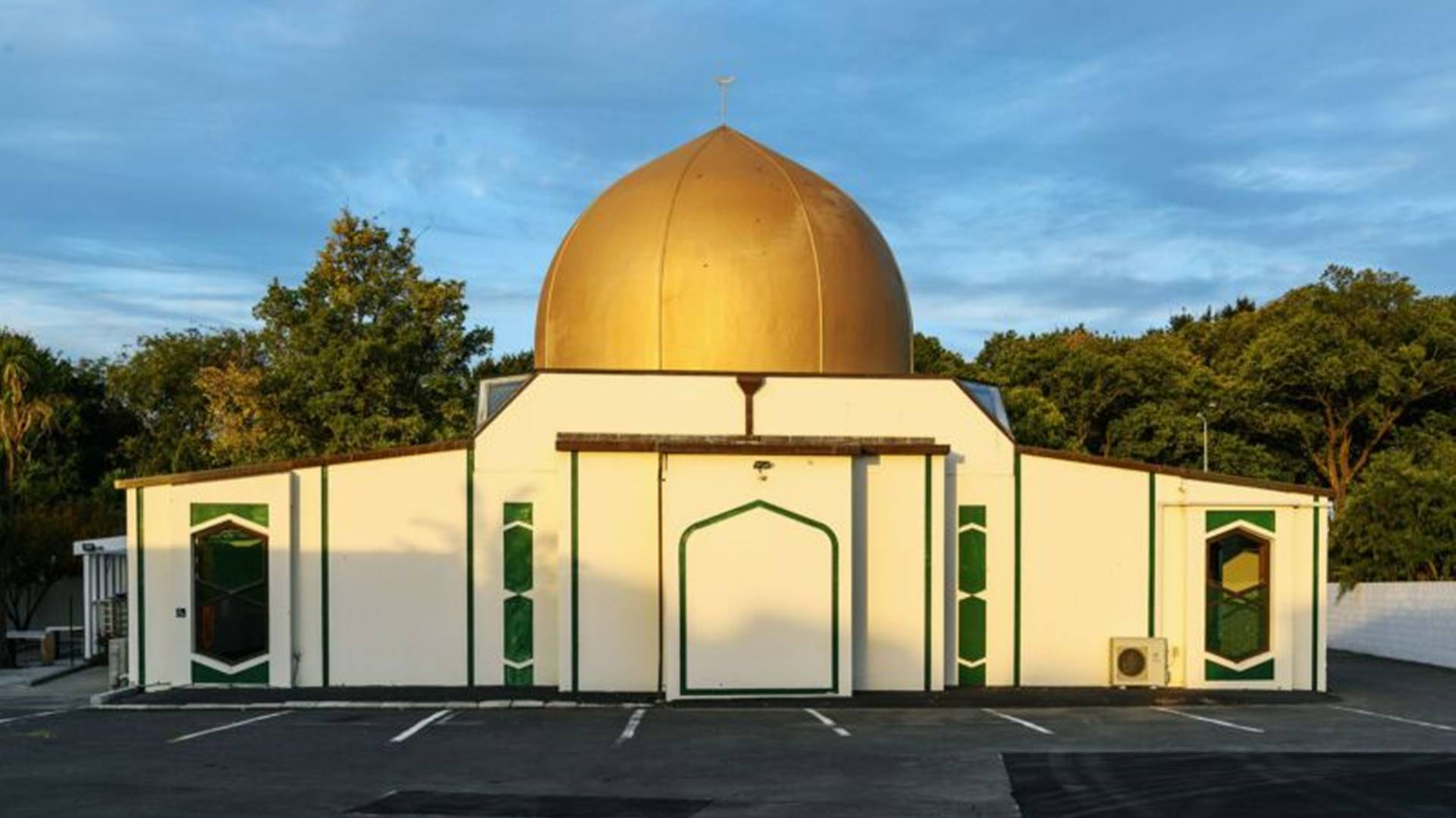

YouTube, Facebook, and other social media platforms were instrumental in radicalizing the terrorist who killed 51 worshippers in a March 2019 attack on New Zealand mosques, according to a new report from the country’s government. Online radicalization experts speaking with WIRED say that while platforms have cracked down on extremist content since then, the fundamental business models behind top social media sites still play a role in online radicalization.

According to the report, released this week, the terrorist regularly watched extremist content online and donated to organizations like the Daily Stormer, a white supremacist site, and Stefan Molyneux’s far-right Freedomain Radio. He also gave directly to Austrian far-right activist Martin Sellner. “The individual claimed that he was not a frequent commenter on extreme right-wing sites and that YouTube was, for him, a far more significant source of information and inspiration,” the report says.

The terrorist’s interest in far-right YouTubers and edgy forums like 8chan is not a revelation. But until now, the details of his involvement with these online far-right organizations were not public. Over a year later, YouTube and other platforms have taken steps toward accepting responsibility for white supremacist content that propagates on their websites, including removing popular content creators and hiring thousands more moderators. Yet according to experts, until social media companies open the lid on their black-box policies and even algorithms, white supremacist propaganda will always be a few clicks away.

“The problem goes far deeper than the identification and removal of pieces of problematic content,” said a New Zealand government spokesperson over email. “The same algorithms that keep people tuned to the platform and consuming advertising can also promote harmful content once individuals have shown an interest.”

Entirely unexceptional

The Christchurch attacker’s pathway to radicalization was entirely unexceptional, say three experts speaking with WIRED who had reviewed the government report. He came from a broken home and from a young age was exposed to domestic violence, sickness, and suicide. He had unsupervised access to a computer, where he played online games and, at age 14, discovered the online forum 4chan. The report details how he expressed racist ideas at his school, and he was twice called in to speak with its anti-racism contact officer regarding anti-Semitism. The report describes him as somebody with “limited personal engagement,” which “left considerable scope for influence from extreme right-wing material, which he found on the internet and in books.” Aside from a couple of years working as a personal trainer, he had no consistent employment.

The terrorist’s mother told the Australian Federal Police that her concerns grew in early 2017. “She remembered him talking about how the Western world was coming to an end because Muslim migrants were coming back into Europe and would out-breed Europeans,” the report says. The terrorist’s friends and family provided narratives of his radicalization that are supported by his internet activity: shared links, donations, comments. While he was not a frequent poster on right-wing sites, he spent ample time in the extremist corners of YouTube.

A damning 2018 report by Stanford researcher and Ph.D. candidate Becca Lewis describes the alternative media system on YouTube that fed young viewers far-right propaganda. This network of channels, which range from mainstream conservatives and libertarians to overt white nationalists, collaborated with each other, funneling viewers into increasingly extreme content streams. She points to Stefan Molyneux as an example. “He’s been shown time and time again to be an important vector point for people’s radicalization,” she says. “He claimed there were scientific differences between the races and promoted debunked pseudoscience. But because he wasn’t a self-identified or overt neo-Nazi, he became embraced by more mainstream people with more mainstream platforms.” YouTube removed Molyneux’s channel in June of this year.

This “step-ladder of amplification” is in part a byproduct of the business model for YouTube creators, says Lewis. Revenue is directly tied to viewership, and exposure is currency. While these networks of creators played off each other’s fan bases, the drive to gain more viewers also incentivized them to post increasingly inflammatory and incendiary content. “One of the most disturbing things I found was not only evidence that audiences were getting radicalized, but also data that literally showed creators getting more radical in their content over time,” she says.

Making “significant progress”?

In an email statement, a YouTube spokesperson says that the company has made “significant progress in our work to combat hate speech on YouTube since the tragic attack at Christchurch.” Citing 2019’s strengthened hate speech policy, the spokesperson says that there has been a “5x spike in the number of hate videos removed from YouTube.” YouTube has also altered its recommendation system to “limit the spread of borderline content.”

YouTube says that of the 1.8 million channels terminated for violating its policies last quarter, 54,000 were for hate speech, the most ever. YouTube also removed more than 9,000 channels and 200,000 videos for violating rules against promoting violent extremism. In addition to Molyneux, YouTube’s June bans included David Duke and Richard Spencer. (The Christchurch terrorist donated to the National Policy Institute, which Spencer runs.) For its part, Facebook says it has banned over 250 white supremacist organizations from its platforms and strengthened its dangerous individuals and organizations policy.

“It’s clear that the core of the business model has an impact on allowing this content to grow and thrive,” says Lewis. “They’ve tweaked their algorithm, they’ve kicked some people off the platform, but they haven’t addressed that underlying issue.”

Online culture does not begin and end with YouTube or anywhere else, by design. Fundamental to the social media business model is cross-platform sharing. “YouTube isn’t just a place where people go for entertainment; they get sucked into these communities. Those allow you to participate via comment, sure, but also by making donations and boosting the content in other places,” says Joan Donovan, research director of Harvard University’s Shorenstein Center on Media, Politics, and Public Policy. According to the New Zealand government report, the Christchurch terrorist regularly shared far-right Reddit posts, Wikipedia pages, and YouTube videos, including in an unnamed gaming site chat.

Fitting in

The Christchurch mosque terrorist also followed and posted on several white nationalist Facebook groups, sometimes making threatening comments about immigrants and minorities. According to the report authors who interviewed him, “the individual did not accept that his comments would have been of concern to counter-terrorism agencies. He thought this because of the very large number of similar comments that can be found on the internet.” (At the same time, he did take steps to minimize his digital footprint, including deleting emails and removing his computer’s hard drive.)

Reposting or proselytizing white supremacist content without context or warning, says Donovan, paves a frictionless road for the spread of fringe ideas. “We have to look at how these platforms provide the capacity for broadcast and for scale that, unfortunately, have now started to serve negative ends,” she says.

YouTube’s business incentives inevitably stymie that sort of transparency. There aren’t great ways for outside experts to assess or compare techniques for minimizing the spread of extremism cross-platform. They often must rely instead on reports put out by the companies about their own platforms. Daniel Kelley, associate director of the Anti-Defamation League’s Center for Technology and Society, says that while YouTube reports an increase in extremist content takedowns, the measure doesn’t speak to its past or current prevalence. Researchers outside the company don’t know how the recommendation algorithm worked before, how it changed, how it works now, and what the effect is. And they don’t know how “borderline content” is defined, an important point considering that many argue it continues to be prevalent across YouTube, Facebook, and elsewhere.

Questionable results

“It’s hard to say whether their effort has paid off,” says Kelley. “We don’t have any information on whether it’s really working or not.” The ADL has consulted with YouTube, but Kelley says he hasn’t seen any documents on how it defines extremism or trains content moderators on it.

A real reckoning over the spread of extremist content has incentivized big tech to put big money on finding solutions. Throwing moderation at the problem appears effective. How many banned YouTubers have withered away in obscurity? But moderation doesn’t address the ways in which the foundations of social media as a business (creating influencers, cross-platform sharing, and black-box policies) are also integral to perpetuating hate online.

Many of the YouTube links the Christchurch shooter shared have been removed for breaching YouTube’s moderation policies. The networks of people and ideologies engineered through them and through other social media persist.

Arstechnica.com / TechConflict.Com