The immense potential and challenges of multimodal AI

Unlike most AI systems, humans understand the meaning of text, videos, audio, and images together in context. For example, given text and an image that seem innocuous when considered apart (e.g., “Look how many people love you” and a picture of a barren desert), people recognize that these elements take on potentially hurtful connotations when they’re paired or juxtaposed.

While systems capable of making these multimodal inferences remain beyond reach, there’s been progress. New research over the past year has advanced the state of the art in multimodal learning, particularly in the subfield of visual question answering (VQA), a computer vision task in which a system is given a text-based question about an image and must infer the answer. As it turns out, multimodal learning can carry complementary information or trends, which often only become evident when all the modalities are included in the learning process. And this holds promise for applications ranging from captioning to translating comic books into different languages.

Multimodal challenges

In multimodal systems, computer vision and natural language processing models are trained together on datasets to learn a combined embedding space, that is, a space occupied by variables representing specific features of the images, text, and other media. If different words are paired with similar images, those words are likely used to describe the same things or objects, while if some words appear next to different images, this implies those images represent the same object. It should be possible, then, for a multimodal system to predict things like image objects from text descriptions, and a body of academic literature has shown this to be the case.
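To make the idea of a combined embedding space more concrete, here is a minimal Python sketch. It assumes the image and text encoders have already produced vectors (the toy numbers are made up, not outputs of any real model), measures closeness with cosine similarity, and computes a symmetric contrastive (InfoNCE-style) loss, which is one common way, though not the only one, to train encoders so that matching images and captions land near each other. The helper names `retrieve` and `contrastive_loss` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two points in the shared embedding space."""
    return sum(a * b for a, b in zip(normalize(u), normalize(v)))


def retrieve(query: Vector, candidates: Sequence[Vector]) -> int:
    """Index of the candidate closest to the query (e.g., caption -> image lookup)."""
    scores = [cosine(query, c) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


def contrastive_loss(images: Sequence[Vector], texts: Sequence[Vector],
                     temperature: float = 0.1) -> float:
    """Symmetric InfoNCE-style loss in which image i is paired with text i.

    Minimizing it pulls matched image/text embeddings together and pushes
    mismatched ones apart, which is what shapes a combined embedding space.
    """
    n = len(images)
    logits = [[cosine(images[i], texts[j]) / temperature for j in range(n)]
              for i in range(n)]

    def cross_entropy(row: List[float], target: int) -> float:
        # -log softmax(row)[target], using log-sum-exp for numerical stability.
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        return log_z - row[target]

    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


# Toy, hand-written vectors standing in for encoder outputs (hypothetical).
image_embs = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 0.95]]  # dog, car, tree photos
text_embs = [[1.0, 0.0, 0.1], [0.1, 0.9, 0.1], [0.0, 0.2, 1.0]]    # "a dog", "a car", "a tree"

print(retrieve(text_embs[1], image_embs))             # 1: the car photo
print(contrastive_loss(image_embs, text_embs))        # low: pairs line up
print(contrastive_loss(image_embs, text_embs[::-1]))  # higher: pairs shuffled
```

In a real system the vectors come from trained networks with hundreds of dimensions, but the retrieval step (nearest neighbor by cosine similarity) is the same idea that lets a model go from a text description to the image it most likely describes.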


There’s just one problem: Multimodal systems notoriously pick up on biases in datasets. The diversity of questions and concepts involved in tasks like VQA, as well as the lack of high-quality data, often prevents models from learning to “reason,” leading them to make educated guesses by relying on dataset statistics.

Key insights might lie in a benchmark developed by scientists at Orange Labs and Institut National des Sciences Appliquées de Lyon. Arguing that the standard metric for measuring VQA model accuracy is misleading, they offer an alternative, GQA-OOD (OOD stands for “out-of-distribution”), which evaluates performance on questions whose answers can’t be inferred without reasoning. In a study involving seven VQA models and three bias-reduction techniques, the researchers found that the models failed to handle questions involving infrequent concepts, suggesting that there’s work to be done in this area.
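The point about bias and rare concepts can be illustrated with a small Python sketch: a "blind" baseline that ignores the image and always answers with the most common training answer for a question type, scored separately on frequent (head) and rare (tail) answers. The rarity rule, the `alpha` threshold, the notion of a "question group," and all data below are simplifying assumptions for illustration, not GQA-OOD's exact definitions or code.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Tuple

# A (question_group, answer) pair. A "group" is a coarse question type such as
# "chair color"; real benchmarks also give the model the image and full question.
Example = Tuple[str, str]


def answer_counts(train: List[Example]) -> Dict[str, Counter]:
    """Count how often each answer appears within each question group."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for group, answer in train:
        counts[group][answer] += 1
    return counts


def is_tail(group: str, answer: str, counts: Dict[str, Counter],
            alpha: float = 0.2) -> bool:
    """Simplified rarity rule (an assumption, not the benchmark's exact one):
    an answer is 'tail' if it occurs at most (1 + alpha) times the group's
    mean answer frequency. Answers never seen in training count as tail."""
    group_counts = counts.get(group)
    if not group_counts:
        return True  # unseen question type: nothing to be "frequent" against
    mean = sum(group_counts.values()) / len(group_counts)
    return group_counts[answer] <= (1 + alpha) * mean


def evaluate(model: Callable[[str], str], test: List[Example],
             counts: Dict[str, Counter]) -> Dict[str, float]:
    """Report accuracy separately on frequent (head) and rare (tail) answers."""
    hits = {"head": 0, "tail": 0}
    totals = {"head": 0, "tail": 0}
    for group, answer in test:
        split = "tail" if is_tail(group, answer, counts) else "head"
        totals[split] += 1
        hits[split] += int(model(group) == answer)
    return {s: hits[s] / totals[s] for s in totals if totals[s]}


def prior_baseline(counts: Dict[str, Counter]) -> Callable[[str], str]:
    """A 'blind' model that ignores the image and always returns the most
    common training answer for the question group: pure dataset statistics."""
    def predict(group: str) -> str:
        top = counts.get(group, Counter()).most_common(1)
        return top[0][0] if top else ""
    return predict


# Hypothetical toy data with skewed answer distributions.
train = ([("chair color", "brown")] * 8
         + [("chair color", "black"), ("chair color", "white")]
         + [("sky weather", "sunny")] * 6 + [("sky weather", "rainy")] * 2)
test = [("chair color", "brown"), ("chair color", "white"),
        ("sky weather", "sunny"), ("sky weather", "rainy")]

counts = answer_counts(train)
print(evaluate(prior_baseline(counts), test, counts))  # {'head': 1.0, 'tail': 0.0}
```

Averaged over all four test questions, the blind baseline scores 50%, which hides the fact that it never gets a rare answer right; reporting the tail split separately is what exposes the guessing.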

The solution will likely involve larger, more comprehensive training datasets. A paper published by engineers at École Normale Supérieure in Paris, Inria Paris, and the Czech Institute of Informatics, Robotics, and Cybernetics proposes a VQA dataset created from millions of narrated videos. Consisting of question-and-answer pairs generated automatically from transcribed videos, the dataset eliminates the need for manual annotation while enabling strong performance on popular benchmarks, according to the researchers. (Most machine learning models learn to make predictions from data labeled either automatically or by hand.)
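The core idea of mining question-answer pairs from narration, rather than paying annotators, can be sketched in a few lines of Python. This is a deliberately crude stand-in: it swaps in a rule-based fill-in-the-blank (cloze) generator, whereas the researchers' pipeline relies on learned models to produce natural questions. The function name `cloze_pairs` and the `vocabulary` parameter are hypothetical.

```python
import re
from typing import Iterable, List, Tuple


def cloze_pairs(transcript: str, vocabulary: Iterable[str]) -> List[Tuple[str, str]]:
    """Turn narration into (question, answer) pairs by blanking out one known
    word per sentence. A crude, rule-based stand-in for a learned generator."""
    vocab = {w.lower() for w in vocabulary}
    pairs: List[Tuple[str, str]] = []
    # Split after sentence-ending punctuation that is followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", transcript.strip()):
        for word in re.findall(r"[A-Za-z']+", sentence):
            if word.lower() in vocab:
                # Mask only the first occurrence of the chosen answer word.
                question = re.sub(rf"\b{re.escape(word)}\b", "___", sentence, count=1)
                pairs.append((question, word.lower()))
                break  # one pair per sentence keeps the sketch simple
    return pairs


narration = "Crack two eggs into the bowl. Whisk in the flour until smooth."
for question, answer in cloze_pairs(narration, vocabulary=["eggs", "flour"]):
    print(f"Q: {question}  A: {answer}")
```

Because each pair is tied to a timestamped transcript sentence, it can also be linked to the video frames on screen at that moment, which is what makes such automatically generated pairs usable for visual question answering.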

In tandem with better datasets, new training techniques might also help boost multimodal system performance. Earlier this year, researchers at Microsoft and the University of Rochester coauthored a paper describing a pipeline aimed at improving the reading and understanding of text in images for question answering and image caption generation. In contrast with conventional vision-language pretraining, which often fails to capture text and its relationship with visuals, their approach incorporates text generated by optical character recognition (OCR) engines during the pretraining process.

Three pretraining tasks and a dataset of 1.4 million image-text pairs help VQA models learn a better-aligned representation between words and objects, according to the researchers. “We find it particularly important to include the detected scene text words as extra language inputs,” they wrote. “The extra scene text modality, together with the specially designed pre-training steps, effectively helps the model learn a better aligned representation among the three modalities: text word, visual object, and scene text.”
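One way to picture "including scene text as extra language input" is as packing three streams into a single sequence, each token tagged with the modality it came from, so a transformer can attend across all of them. The Python sketch below is a hypothetical illustration, not the researchers' code: the modality ids, the `Token` and `pack_inputs` names, and the use of object labels in place of real visual region features are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical modality ids; each real model defines its own scheme.
TEXT_WORD, SCENE_TEXT, VISUAL_OBJECT = 0, 1, 2


@dataclass
class Token:
    value: str     # a word, an OCR string, or an object label standing in for region features
    modality: int  # which input stream the token came from
    position: int  # index within its own stream


def pack_inputs(question: Sequence[str], ocr_words: Sequence[str],
                objects: Sequence[str]) -> List[Token]:
    """Concatenate question words, OCR'd scene text, and detected objects into
    one sequence, tagging each token with its modality so a transformer can
    tell the streams apart while still attending across all of them."""
    streams = [(question, TEXT_WORD), (ocr_words, SCENE_TEXT), (objects, VISUAL_OBJECT)]
    tokens: List[Token] = []
    for items, modality in streams:
        tokens.extend(Token(value, modality, i) for i, value in enumerate(items))
    return tokens


sequence = pack_inputs(
    question="what brand is the soda".split(),
    ocr_words=["coca", "cola"],  # e.g., output of an OCR engine
    objects=["can", "table"],    # e.g., labels from an object detector
)
for token in sequence:
    print(token)
```

Without the scene-text stream, the words printed on the can would be invisible to the model except as raw pixels; tagging them explicitly is what lets pretraining tasks align them with both the question words and the detected objects.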

Beyond pure VQA systems, promising approaches are emerging in the dialogue-driven multimodal domain. Researchers at Facebook, the Allen Institute for AI, SRI International, Oregon State University, and the Georgia Institute of Technology propose “dialog without dialog,” a challenge that requires visually grounded dialogue models to adapt to new tasks without forgetting how to talk with people. For its part, Facebook recently introduced Situated Interactive MultiModal Conversations, a research direction aimed at training AI chatbots that take actions, like showing an object and explaining what it’s made of, in response to images, memories of previous interactions, and individual requests.

Real-world applications

Assuming the barriers standing in the way of performant multimodal systems are eventually overcome, what are the real-world applications?

With its visual dialogue system, Facebook would appear to be pursuing a digital assistant that emulates human partners by responding to images, messages, and messages about images as naturally as a person might. For example, given the prompt “I want to buy some chairs. Show me brown ones and tell me about the materials,” the assistant might reply with an image of brown chairs and the text “How do you like these? They have a solid brown color with a foam fitting.”

Separately, Facebook is working toward a system that can automatically detect hateful memes on its platform. In May, it launched the Hateful Memes Challenge, a competition aimed at spurring researchers to develop systems that can identify memes intended to hurt people. The first phase of the yearlong contest recently crossed the halfway mark with over 3,000 entries from hundreds of teams around the world.

At Microsoft, a handful of researchers are focused on applying multimodal systems to video captioning. A team from Microsoft Research Asia and Harbin Institute of Technology created a system that learns to capture representations among comments, video, and audio, enabling it to supply captions or comments relevant to scenes in videos. In separate work, Microsoft coauthors detailed a model, the Multitask Multilingual Multimodal Pretrained model, that learns universal representations of objects expressed in different languages, allowing it to achieve state-of-the-art results in tasks including multilingual image captioning.

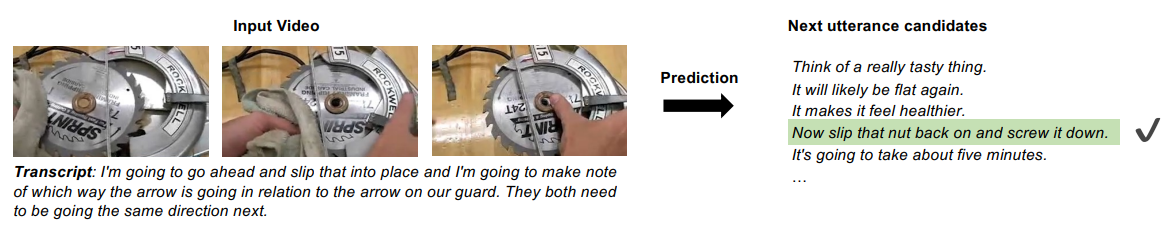

Meanwhile, researchers at Google recently tackled the problem of predicting the next lines of dialogue in a video. They claim that with a dataset of instructional videos scraped from the web, they were able to train a multimodal system to predict what a narrator would say next. For example, given frames from a scene and the transcript “I’m going to go ahead and slip that into place and I’m going to make note … of which way the arrow goes in relation to the arrow on our guard. They both need to be going the same direction next,” the model could correctly predict “Now slip that nut back on and screw it down” as the next phrase.

“Imagine that you are cooking an elaborate meal but forget the next step in the recipe, or fixing your car and unsure about which tool to pick up next,” the coauthors of the Google study wrote. “Developing an intelligent dialogue system that not only emulates human conversation, but also predicts and suggests future actions (not to mention is able to answer questions on complex tasks and topics), has long been a moonshot goal for the AI community. Conversational AI allows humans to interact with systems in free-form natural language.”

Another fascinating study proposes using multimodal systems to translate manga, a form of Japanese comic, into other languages. Scientists at Yahoo! Japan, the University of Tokyo, and machine translation startup Mantra prototyped a system that translates text in speech bubbles that can’t be translated without context information (e.g., text in other speech bubbles, the gender of speakers). Given a manga page, the system automatically translates the text on the page into English and replaces the original text with the translation.

Future work

At VentureBeat’s Transform 2020 conference, as part of a conversation about trends for AI assistants, Prem Natarajan, Amazon head of product and VP of Alexa AI and NLP, and Barak Turovsky, Google AI director of product for the NLU team, agreed that research into multimodality will be of critical importance going forward. Turovsky talked about advances in surfacing the limited number of answers voice alone can offer. Without a screen, he pointed out, there’s no infinite scroll or first page of Google search results, so responses should be limited to three potential results, tops. For both Amazon and Google, this means building smart displays and emphasizing AI assistants that can both share visual content and respond with voice.

Turovsky and Natarajan aren’t the only ones who see a future in multimodality, despite its challenges. OpenAI is reportedly developing a multimodal system trained on images, text, and other data using massive computational resources, an approach the company’s leadership believes is the most promising path toward AGI, or AI that can learn any task a human can. And in a conversation with VentureBeat in January, Google AI chief Jeff Dean predicted progress in multimodal systems in the years ahead. The advancement of multimodal systems could lead to a number of benefits for image recognition and language models, he said, including more robust inference from models receiving input from more than a single medium.

“That whole research thread, I think, has been quite fruitful in terms of actually yielding machine learning models that [let us now] do more sophisticated NLP tasks than we used to be able to do,” Dean told VentureBeat. “[But] we’d still like to be able to do much more contextual kinds of models.”

VentureBeat / TechConflict.com